Rate-limiting is an effective and simple technique to protect APIs against malicious or unintentional overuse. Without a rate limit, anyone can bombard a server with requests and cause spikes in traffic that eat up resources, “starve” other users, and make the service unresponsive.

This article is an intro to rate limiting and the importance of restricting the number of requests that reach APIs and services. It explains what rate limits are and how they work, as well as the various algorithms you can use for rate limiting. The stakes are high: approximately 31% (around 5 billion) of all malicious transactions targeted APIs, which should place securing this attack vector at the top of an organization’s to-do list.

What Is Rate Limiting?

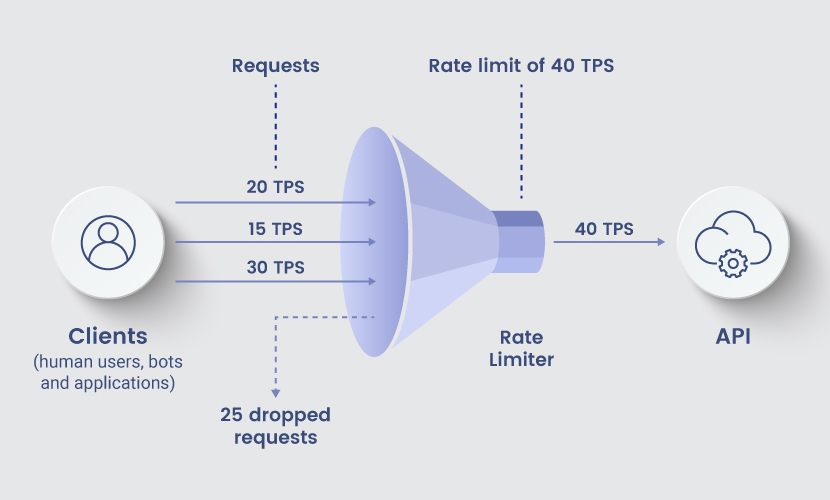

Rate limiting is the practice of restricting the number of requests users can make to a specific API or service. You can limit how many times users can perform an action, such as logging into an account or sending a message. If someone reaches their limit, the server begins rejecting additional requests.

Rate limiting is both a cybersecurity precaution and a key part of software quality assurance (QA). Companies use rate limits to:

- Prevent a large number of requests from overwhelming a web or application server.

- Ensure all users have equal access to the API and that no one consumes too much bandwidth, data storage, or memory.

- Stop human users, bots, and applications (anyone or anything with the ability to issue calls to an API) from abusing a web property.

- Prevent different kinds of malicious bot activity (notably DoS/DDoS and brute-force attacks).

Technically, rate limiting is a form of traffic shaping. Social media messaging is a common example: if someone decides to send a thousand messages to other profiles, rate limiting kicks in and stops the user from sending messages for a certain period.


Why Is Rate Limiting Important?

Here’s a list of the main reasons why rate limiting is an essential aspect of any healthy service:

- Preventing overloads: Too many requests can overwhelm a server, causing it to slow down or even become unresponsive. Limiting the number of requests a server or API processes helps maintain the performance and availability of your service.

- Ensuring fairness: Rate limiting prevents any user from monopolizing resources at the expense of others. Placing a cap on allowed requests gives everyone a fair opportunity to use the service.

- Managing costs: If there’s a cost associated with each request made to the service, rate limiting is essential to controlling expenses and ensuring efficient use of resources.

- Usage metering: If a user signs up for a plan that allows, for example, 1000 API requests per hour, rate limiting ensures the client stays within the set cap.

- Controlling data flow: Rate limiting enables an admin to control data flows, which is key for APIs that process large volumes of data. For example, you could distribute data evenly between two APIs by limiting the flow into each one.

- Protecting against malicious activity: Rate limiting is vital to preventing several types of cyberattacks, including denial-of-service attacks, brute-force attacks, and credential stuffing. The rate-limiting mechanism typically tracks two factors:

  - The IP addresses of the users sending requests.

  - The time that elapses between requests.

Transactions per second (TPS) is the main metric used to set rate limits. If a single IP address sends too many requests in a short period of time (i.e., exceeds its TPS limit), rate limiting stops the server from responding to it. The user receives an error message and cannot send any more requests until the timer resets. Rate limiting relies on a throttling system that blocks or slows requests. Admins implement rate limiting on the server or client side, depending on which strategy better fits the use case:

- Server-side rate limiting is more effective at preventing overload and stopping malicious activity.

- Client-side rate limiting is better at managing costs and ensuring fair use of resources.

Many admins also set rate limits based on usernames. This approach prevents brute-force attackers from getting around IP-based limits by attempting to log in from multiple IP addresses.
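The following minimal Python sketch shows why per-username limits complement per-IP limits during login. It assumes a simple in-memory fixed-window counter for each key; the `LoginLimiter` class, its default quotas (5 attempts per IP and 3 per username per minute), and the `(status, headers)` return value are hypothetical. A rejected attempt gets HTTP 429 (Too Many Requests) with a `Retry-After` header that tells the client how many seconds remain in the window.

```python
import math
import time
from http import HTTPStatus


class LoginLimiter:
    """Hypothetical in-memory limiter that caps login attempts per IP address
    and per username, each with its own fixed window."""

    def __init__(self, per_ip=5, per_user=3, window=60.0, clock=time.monotonic):
        self.limits = {"ip": per_ip, "user": per_user}
        self.window = window
        self.clock = clock
        self.counters = {}  # (kind, value) -> (window_start, count)

    def _current(self, key, now):
        start, count = self.counters.get(key, (now, 0))
        if now - start >= self.window:
            return now, 0  # the old window expired; start a fresh one
        return start, count

    def attempt(self, ip, username):
        """Return (status, headers) for one login attempt."""
        now = self.clock()
        state = {k: self._current(k, now) for k in (("ip", ip), ("user", username))}
        # Reject if EITHER the IP or the username has used up its quota,
        # so rotating IP addresses does not help against a single account.
        for (kind, _), (start, count) in state.items():
            if count >= self.limits[kind]:
                retry_after = math.ceil(start + self.window - now)
                return HTTPStatus.TOO_MANY_REQUESTS, {"Retry-After": str(retry_after)}
        # Accepted: charge the attempt to both counters.
        for key, (start, count) in state.items():
            self.counters[key] = (start, count + 1)
        return HTTPStatus.OK, {}
```

An attacker who rotates through many IP addresses while targeting one account is stopped after the third attempt by the username counter, while a single IP spraying many usernames is stopped by the IP counter. In a multi-server deployment, the counters would need to live in shared storage rather than in process memory.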

Types of Rate Limits

Let’s look at the different types of rate limits you can use to control access to a server or API. You can combine the different types to create a hybrid strategy. For example, you may limit the number of requests based on both IP addresses and certain time intervals.

Time-Based Rate Limits

Time-based rate limits operate on pre-defined time intervals. For example, a server may limit requests to a certain number per time period (such as 100 per minute).

Time-based rate limits typically apply to all users. You can set these limits to be either fixed (timers count down regardless of whether and when users make requests) or sliding (the countdown starts whenever someone makes the first request).

Geographic Rate Limits

Geographic rate limits restrict the number of requests coming from certain regions. These caps are a great choice for location-based campaigns: admins can limit requests from outside the target audience and increase availability in target regions. These rate limits are also good at preventing suspicious traffic. For example, you could predict that users in a particular region are less active between 11:00 PM and 8:00 AM and set a lower rate limit for that period, which further constrains any attacker hoping to cause problems with malicious traffic.
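The night-time example above boils down to picking a limit from the client’s region and local hour. In this minimal Python sketch, the region codes, the limits, and the 11:00 PM to 8:00 AM quiet period are hypothetical values; resolving a client’s region (for example, from its IP address) is assumed to happen elsewhere.

```python
# Hypothetical values for illustration only: the region codes, limits, and
# quiet hours are assumptions, not recommendations.
TARGET_REGIONS = {"US", "CA"}                   # regions the campaign targets
DAY_LIMITS = {"target": 1000, "other": 100}     # requests per minute
NIGHT_LIMITS = {"target": 200, "other": 20}     # lower caps for quiet hours
NIGHT_START, NIGHT_END = 23, 8                  # 11:00 PM to 8:00 AM, local time


def is_quiet_hour(hour: int) -> bool:
    """True between NIGHT_START and NIGHT_END; the period wraps past midnight."""
    return hour >= NIGHT_START or hour < NIGHT_END


def limit_for(region: str, local_hour: int) -> int:
    """Per-minute request cap for a client in `region` at `local_hour` (0-23)."""
    tier = "target" if region in TARGET_REGIONS else "other"
    limits = NIGHT_LIMITS if is_quiet_hour(local_hour) else DAY_LIMITS
    return limits[tier]
```

Because the quiet period crosses midnight, the check is "late evening or early morning" rather than a single range comparison, which is an easy place to introduce an off-by-one bug.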

User-Based Rate Limits

User-based rate limits control the number of actions individual users can take in a certain time frame. For example, a server may limit the number of login attempts each user can make to 100 per day.

User-based limits are the most common type of rate limiting. Most systems identify users by their IP address, their API key, or both. This type of rate limiting requires the system to maintain usage statistics for each user, a setup that often adds operational overhead and increases overall IT costs.

Concurrency Rate Limiting

Concurrency rate limits control the number of parallel sessions the system allows at the same time. For example, an app might refuse to open new sessions while 1,000 are already active.
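A concurrency cap counts sessions that are open at the same moment rather than requests per unit of time. This minimal Python sketch uses `threading.BoundedSemaphore` and assumes a single process; the `ConcurrencyLimiter` and `TooManySessions` names are hypothetical.

```python
import threading
from contextlib import contextmanager


class TooManySessions(Exception):
    """Raised when every session slot is already in use."""


class ConcurrencyLimiter:
    """Caps how many sessions may be open at the same time (single process)."""

    def __init__(self, max_sessions: int):
        self._slots = threading.BoundedSemaphore(max_sessions)

    @contextmanager
    def session(self):
        # Claim a slot without waiting; refuse right away if none is free.
        if not self._slots.acquire(blocking=False):
            raise TooManySessions("concurrent session limit reached")
        try:
            yield
        finally:
            self._slots.release()  # the slot frees up when the session ends
```

For a 1,000-session cap, you would create `ConcurrencyLimiter(max_sessions=1000)` and wrap each session in `with limiter.session():`. Enforcing the same cap across several servers would require a shared counter instead of an in-process semaphore.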

Server Rate Limits

Server rate limiting helps admins share a workload among different servers. If you have a distributed architecture with five servers, for example, you can use rate limiting to set a cap per server. Such a strategy is vital to achieving high availability and preventing DoS attacks that target a specific server.

API Endpoint-Based Rate Limiting

These rate limits are based on the specific API endpoints users are trying to access. For example, an admin may limit requests to a specific endpoint to 50 per minute, either due to security or overloading concerns.


Rate Limiting Algorithms

Here are the most common algorithms companies rely on to implement rate limiting:

Token bucket:

Each time a user makes a request, the system removes a token from the so-called token bucket. Once the bucket is empty, the user cannot make further requests until the system refills the bucket with tokens.
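To make the mechanism concrete, here is a minimal Python sketch of a token bucket. It assumes tokens refill continuously at a fixed rate (one common way to implement the refill) rather than in a single periodic reset; `capacity`, `rate`, and the injectable `clock` are illustrative parameter names, not a particular library’s API.

```python
import time


class TokenBucket:
    """Token bucket sketch: tokens refill continuously at `rate` per second,
    up to `capacity`; each request spends one token."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add the tokens earned since the last check, without overflowing.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1  # spend one token on this request
            return True
        return False  # bucket empty: reject until it refills
```

For example, `TokenBucket(capacity=10, rate=1.0)` lets a client burst up to 10 requests and then sustain about one request per second. A capacity well above the refill rate lets idle users bank tokens for later, similar in spirit to the greedy token bucket described next.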

Greedy token bucket:

This algorithm allows users to accumulate unused tokens and build up a bigger bucket. Users who don’t use their full token quota can make more requests later.

Leaky bucket:

Incoming requests fill the bucket, and the system processes (leaks) them at a fixed rate. The system drops new requests if the bucket becomes full (i.e., users have reached the limit). The leaky bucket is easy to implement on a load balancer and is highly memory-efficient.
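The leaky bucket can be sketched as a bounded queue that drains at a fixed rate. This minimal Python version assumes a single process and an injectable clock; drained requests are simply appended to a `processed` list as a stand-in for handing them to a worker, and all names are hypothetical.

```python
import time
from collections import deque


class LeakyBucket:
    """Leaky bucket sketch: requests wait in a bounded queue and leak out
    (are processed) at a fixed rate; arrivals that find the queue full are dropped."""

    def __init__(self, capacity: int, leak_rate: float, clock=time.monotonic):
        self.capacity = capacity    # how many requests the bucket can hold
        self.leak_rate = leak_rate  # requests processed per second
        self.clock = clock
        self.queue = deque()
        self.processed = []         # stand-in for handing requests to a worker
        self.last_leak = clock()

    def _leak(self) -> None:
        now = self.clock()
        due = int((now - self.last_leak) * self.leak_rate)
        for _ in range(min(due, len(self.queue))):
            self.processed.append(self.queue.popleft())
        if self.queue:
            # Keep the fractional progress toward the next leak.
            self.last_leak += due / self.leak_rate
        else:
            # An empty bucket does not bank leak capacity for later.
            self.last_leak = now

    def offer(self, request) -> bool:
        """Add a request to the bucket; return False if it was dropped."""
        self._leak()
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True
```

Unlike the token bucket, this design smooths bursts into a steady outflow: requests in a burst are either queued (and therefore delayed) or dropped, never served all at once.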

Fixed window:

This algorithm limits the number of requests users can make within a fixed time window (typically either a minute or an hour). For example, with a limit of 100 requests per minute, the server serves at most 100 requests between 11:00 AM and 11:01 AM. At 11:01 AM, the window resets.

Rolling window:

Instead of using a fixed time window like the previous algorithm, this method relies on a rolling window. The time frame starts only when the user submits a request. For example, if the first request arrives at exactly 10:15:48 and the rate limit is 20 per minute, the server will allow 19 more requests until 10:16:48.

Sliding log:

This algorithm requires the system to maintain a time-stamped log (set or table) of requests for every individual user. To calculate the request rate, the system counts the logged requests that fall within the current time window.

The main factors to consider when selecting a rate-limiting method are the API’s unique requirements and its traffic volume. Your method of choice must prevent overload and stop malicious activity while ensuring that legitimate users can use the service without interruptions.
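The fixed window, rolling window, and sliding log algorithms described above can be sketched in Python as follows. Each instance tracks a single user (a real service might keep one per user in a dictionary keyed by user ID or API key), and the injectable `clock` is an assumption that makes the behavior easy to test. The class names are hypothetical, and `RollingWindow` follows this article’s description, where the window opens at a user’s first request.

```python
import time
from collections import deque


class FixedWindow:
    """Counts requests per clock-aligned window (e.g. 11:00:00 to 11:00:59)."""

    def __init__(self, limit: int, window: float = 60.0, clock=time.time):
        self.limit, self.window, self.clock = limit, window, clock
        self.current, self.count = None, 0

    def allow(self) -> bool:
        slot = int(self.clock() // self.window)  # index of the window we are in
        if slot != self.current:                 # a new window started: reset
            self.current, self.count = slot, 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


class RollingWindow:
    """The window opens at the user's first request, as in the 10:15:48 example."""

    def __init__(self, limit: int, window: float = 60.0, clock=time.time):
        self.limit, self.window, self.clock = limit, window, clock
        self.start, self.count = None, 0

    def allow(self) -> bool:
        now = self.clock()
        if self.start is None or now - self.start >= self.window:
            self.start, self.count = now, 0      # this request opens a new window
        if self.count < self.limit:
            self.count += 1
            return True
        return False


class SlidingLog:
    """Keeps a timestamp per accepted request and counts those in the last window."""

    def __init__(self, limit: int, window: float = 60.0, clock=time.time):
        self.limit, self.window, self.clock = limit, window, clock
        self.log = deque()

    def allow(self) -> bool:
        now = self.clock()
        while self.log and now - self.log[0] >= self.window:
            self.log.popleft()                   # drop timestamps older than the window
        if len(self.log) < self.limit:
            self.log.append(now)
            return True
        return False
```

The trade-off shows at window boundaries: with `FixedWindow(limit=100)`, a client can send 100 requests at 11:00:59 and another 100 at 11:01:00. `SlidingLog` rules that out because it always looks back a full window, at the cost of storing one timestamp per accepted request.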

How To Implement Rate Limiting?

Below is a step-by-step guide to implementing rate limiting (although the exact way you set limits depends on your specific tech stack):

Determine rate limit rules:

Decide on the specific rate limit and the type of limit you’ll use (time-based or request-based), then select a rate-limiting algorithm. The token bucket works well for user-based rates, while the fixed window is a good fit for time-based rate limits.

Decide where to implement the rate limit:

Decide whether the team should implement rate limiting on the server side, the client side, or both.

Implement the rate limit:

Next, deploy the chosen rate-limiting algorithm. Simulate different traffic situations using a combination of automated and manual testing, then test the system periodically and adjust the rules as needed to keep performance levels up.

Implementing rate limiting is a simple process for most use cases. For example, if you’re using Nginx as a web server and want to set a rate limit at the server level, you can use the ngx_http_limit_req_module, which provides the limit_req_zone and limit_req directives.

A Simple Yet Highly Effective Defensive Practice

Rate limiting is crucial for the security and quality of your APIs, apps, and websites. If you don’t limit the number of requests, you are vulnerable to traffic-based threats and poor performance, which can lead to higher bounce rates, customer retention issues, and more. For most use cases, setting a rate limit is an easy decision, especially given how simple it is to implement.