The mention of Vagrant might have made you think that this is yet another article on the power of sharing application environments the way one shares code, an approach that Vagrant facilitates. There is a lot of information on this topic and the benefits are well known. We will instead describe our experience using Vagrant in a slightly unusual way: to remove certain limitations or pain points in development that are caused by the choice of OS on the developer’s local workstation. That choice could be a requirement from an organization, a regulatory mandate, or anything else that is out of the developers’ control. Yes, we also considered the possibility of replacing the host OS. However, we found that the way the technologies came together in this approach can be quite powerful.

We’ll take this opportunity to communicate some of the lessons learned and limitations of the approach and share some ideas of how certain problems can be solved.

Why Vagrant?

The problem that we were trying to solve and the concept of how we tried to solve it do not necessarily depend on Vagrant. The idea behind the project was to deploy a virtual machine on a local hypervisor. At first, running the VM locally may seem a bit strange. However, as we found out, this gives us certain advantages that allow us to create a better experience for the developer by creating an extension to the workstation.

We opted for VirtualBox as the virtualization provider, primarily because of our familiarity with the tool. This is where Vagrant comes into play. Vagrant is one of the tools that make up the open-source HashiCorp suite, which is aimed at solving the different challenges in automating infrastructure provisioning.

In particular, Vagrant is concerned with managing VM environments in the development phase. Note that for production environments there are other tools in the same suite that are more suitable for the job, such as Terraform and Packer. These tools are built around configuration as code, which means that an environment is easily shared among team members, and all changes can be tracked and version controlled. Vagrant is opinionated, so declaring an environment and its configuration is concise, which makes it easy to write and understand.

Read more about the effects of VMs on development in our blog post DevOps and Virtualization.

Why Ansible?

After settling on Vagrant for our solution and enjoying the automated creation of the VM, the next step was to find a way to provision that VM in a manner that matches the principles advertised by Vagrant.

We do not recommend having Vagrant spin up the VMs in an environment and then manually installing and configuring the dependencies for your system. Vagrant’s core provisioners are many and varied. As long as provisioning was simple, we continued to use the Shell provisioner (Vagrant uploads scripts and executes them on the guest OS). The main problem was that developers had to write their scripts in a way that favored idempotency: it is common to need to add configuration steps later, and it would be overkill to have everything provisioned from scratch each time.

At that point, we chose to use Ansible. Ansible, by Red Hat, is open-source automation software that manages the execution of plays defined in a playbook, where a play can be thought of as a list of tasks mapped against a group of hosts in an environment.

These plays should ideally be idempotent, which is not always possible. The entire configuration is declared in YAML. This strategy has been a huge success because the community is doing the heavy lifting. Ansible modules are configurable Python scripts that perform specific tasks, and they are available for almost anything. This makes it very simple and quick to install dependencies and configure the guest in accordance with industry standards. Modules are generally highly opinionated, so the developer does not need to get into the details. The integration between Vagrant and Ansible works seamlessly.

Ansible does not work from Windows control machines, even though it is getting more and more support for managing Windows targets. This left us with two options: either create a second environment that acts as an Ansible controller, or use the guest VM itself to run Ansible and provision it. We chose the latter. This was a compromise we were willing to make because the alternative would have been cumbersome. You can achieve this with Vagrant by replacing the provisioner’s identifier (that is, using ansible_local rather than ansible).

File Sharing

We wanted to be able to access the local workspace from the guest OS. This makes the tools that comprise the working environment readily available when running builds within the guest. There are many ways to solve this problem, and the solutions vary depending on your use case. VirtualBox’s two-way file sharing functionality is the easiest way to go. It provides near-instant synchronization, and setting it up is a one-liner in the Vagrantfile.

The main objective here was to share code repositories with the guest. The same mechanism can also be useful for sharing configuration for other tooling. For instance, one might find it useful to configure file sharing for Maven’s user settings file, the entire local repository, local certificates for authentication, etc.
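To make the file sharing one-liner concrete, here is a minimal Vagrantfile sketch. The host paths (`C:/Workspace` and the `.m2` directory under the user's home) and the guest mount points are assumptions chosen for illustration, not the paths used in our setup.

```ruby
# Vagrantfile fragment (a sketch; all paths below are hypothetical).
Vagrant.configure("2") do |config|
  # Share the host workspace with the guest through VirtualBox shared folders.
  config.vm.synced_folder "C:/Workspace", "/vagrant/workspace", type: "virtualbox"

  # The same mechanism can expose tool configuration, for example
  # Maven's user settings and local repository.
  config.vm.synced_folder "#{Dir.home}/.m2", "/home/vagrant/.m2", type: "virtualbox"
end
```

Each `synced_folder` line takes the host path first and the guest path second, so additional shares can be added one line at a time.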

Port Forwarding

VirtualBox’s networking options were a powerful ally for us. We were able to rely on a host-only network, in which the VM can only be reached from the host, combined with port forwarding. This means that software can run locally or inside the guest without its configuration having to change. This can all be done in Vagrant with just one line of code. This NATting can be configured in either direction (host to guest or guest to host).

Bringing it together

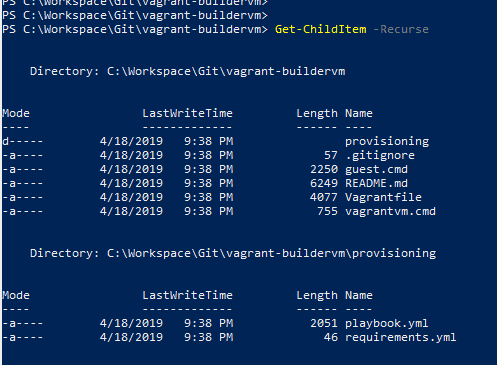

Having defined the foundation for our solution, let’s now briefly go through what we needed to implement all of this. The Vagrantfile is where we define the base OS image (we chose CentOS 7).
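As a rough illustration of the base image and networking parts of such a Vagrantfile, consider the sketch below. The box name `centos/7`, the IP address, and the port numbers are assumptions made for the example and are not taken from our actual configuration.

```ruby
# Vagrantfile fragment (a sketch; box name, IP, and ports are assumptions).
Vagrant.configure("2") do |config|
  # Base OS image for the guest.
  config.vm.box = "centos/7"

  # With the VirtualBox provider, a private network is created as a
  # host-only network, so the VM is reachable only from the host.
  config.vm.network "private_network", ip: "192.168.56.10"

  # Forward a host port to a guest port, so a service inside the guest
  # answers on localhost:8080 just as it would if it ran on the host.
  config.vm.network "forwarded_port", guest: 8080, host: 8080, host_ip: "127.0.0.1"
end
```

Binding the forwarded port to `127.0.0.1` keeps it from being exposed to other machines on the host's network.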

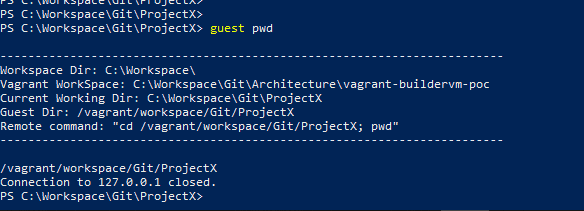

Figure 1: File structure of the solution

Note that the Vagrant plugin `vagrant-vbguest` was useful for automatically determining the appropriate version of VirtualBox’s Guest Additions binaries for the specified guest OS and installing them. We also opted to configure Vagrant to prefer the binaries that are bundled with it for functionality such as SSH (VAGRANT_PREFER_SYSTEM_BIN set to 0) rather than rely on the software already installed on the host. For provisioning, we chose Vagrant’s ansible_local provisioner, which works by installing Ansible in the guest on the fly and running the provisioning locally.

Now, all that is required is to provide an Ansible playbook.yml file, in which one defines any particular configuration or software that needs to be set up on the guest OS.

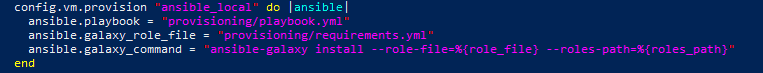

Figure 2: Configuration of Ansible as provisioner in the Vagrantfile

We went a step further and leveraged third-party Ansible roles instead of reinventing the wheel and having to deal with the development and ongoing maintenance costs.

Ansible Galaxy is an online repository of such roles that are made available by the community, and you install them by means of the ansible-galaxy command.

Since Vagrant abstracts away the installation and invocation of Ansible, we also need to rely on Vagrant to ensure that these roles are installed and available for use when the playbook is executed. The galaxy_command parameter is used to achieve this. The most elegant way to do so is to pass a requirements.yml file listing the required roles to the ansible-galaxy command. Finally, we need to make sure that the Ansible files are made available to the guest OS through a file share (by default the directory of the Vagrantfile is shared) and that the paths to them are relative to /vagrant.
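The provisioner configuration described above might look roughly like the following sketch. It assumes that playbook.yml and requirements.yml sit next to the Vagrantfile, which may differ from the file structure shown in Figure 1, and the `galaxy_command` value shown is simply Vagrant's documented default pattern written out explicitly.

```ruby
# Vagrantfile fragment (a sketch; the file layout is an assumption).
Vagrant.configure("2") do |config|
  config.vm.provision "ansible_local" do |ansible|
    # Directory inside the guest from which Ansible runs; the Vagrantfile
    # directory is shared here by default.
    ansible.provisioning_path = "/vagrant"

    # Paths are resolved relative to provisioning_path.
    ansible.playbook = "playbook.yml"

    # Roles listed in this file are installed before the playbook runs.
    ansible.galaxy_role_file = "requirements.yml"

    # Command pattern used to install the roles; Vagrant fills in the
    # %{role_file} and %{roles_path} placeholders.
    ansible.galaxy_command =
      "ansible-galaxy install --role-file=%{role_file} --roles-path=%{roles_path} --force"
  end
end
```

Leaving `galaxy_command` out altogether gives the same behavior; setting it is only needed when the install command has to be customized.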

Building a seamless experience…BAT to the rescue

We were pursuing a solution that makes it as easy as possible to jump from working locally to working inside the VM, and we wanted to be able to switch between the two without needing to change windows. Because the workspace directory was synchronized to the guest VM, the current location on the host allowed us to determine the corresponding path of the workspace in the guest. For example, if on our host we are at C:\Workspace\ProjectX and the workspace is mapped to /vagrant/workspace, then we wanted the ability to easily run a command in /vagrant/workspace/projectx without having to jump through hoops.

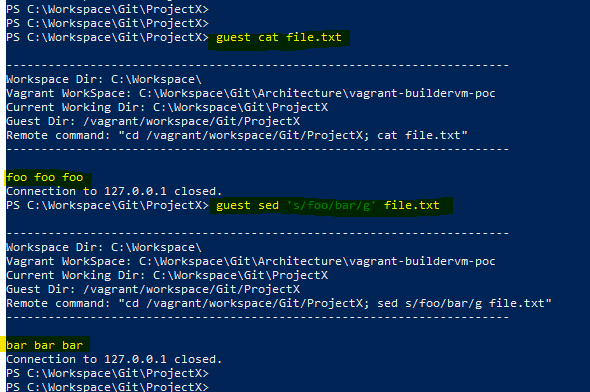

Figure 3: Illustrating how the path is resolved on the guest

Figure 4: Running a command in the guest against files in the local workspace
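The path resolution can be sketched as follows. Our script was a BAT file; this is a Ruby illustration of the same idea rather than the script itself, and the workspace root, the guest mount point, and the function names are all hypothetical. Lowercasing the relative path follows the example above.

```ruby
require 'shellwords'

# Hypothetical mapping between the host workspace and its guest mount point.
HOST_WORKSPACE  = 'C:\Workspace'
GUEST_WORKSPACE = '/vagrant/workspace'

# Translate a Windows host path into the matching guest path,
# or return nil when the path lies outside the shared workspace.
def guest_path(host_path, host_root: HOST_WORKSPACE, guest_root: GUEST_WORKSPACE)
  normalized = host_path.gsub('\\', '/').chomp('/')
  root = host_root.gsub('\\', '/').chomp('/')

  # Windows paths are case-insensitive, so compare in lowercase.
  inside = normalized.downcase == root.downcase ||
           normalized.downcase.start_with?(root.downcase + '/')
  return nil unless inside

  relative = normalized[root.length..-1] # "" or "/ProjectX"
  guest_root + relative.downcase
end

# Build the argument list that runs a command in the guest directory
# corresponding to the given host directory.
def vagrant_ssh_args(host_path, command)
  path = guest_path(host_path)
  raise ArgumentError, "#{host_path} is outside the shared workspace" if path.nil?

  ['vagrant', 'ssh', '-c', "cd #{Shellwords.escape(path)} && #{command}"]
end
```

Passing the result to `system(*vagrant_ssh_args(Dir.pwd, 'mvn clean install'))` would then run the build inside the guest against the files in the current host directory.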

We also added to the same script the ability to SSH into the VM directly at the path corresponding to the current location on the host. On VM provisioning, we created a file share that allowed us to sync the bashrc file in the vagrant user’s home directory. This allows us to cd into the desired path (which is derived on the fly) on the guest upon login.

We also wanted to be able to manage the VM from any location on the host. This is standard Vagrant functionality that is made possible by setting the %VAGRANT_CWD% variable. We added the option to define it permanently in a user variable, or to set it only when we wanted to manage this particular environment.

Figure 5: Spinning up the VM from an arbitrary path
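One way to set VAGRANT_CWD only for a single invocation, instead of defining it permanently, is sketched below in Ruby. The helper names and the example directory are hypothetical, and our own implementation lived in the BAT script rather than in Ruby.

```ruby
# Environment for one Vagrant invocation: VAGRANT_CWD points Vagrant at the
# directory that contains the Vagrantfile, whatever the current directory is.
def vagrant_env(vagrantfile_dir)
  { 'VAGRANT_CWD' => File.expand_path(vagrantfile_dir) }
end

# Run a Vagrant subcommand with that environment applied to the child
# process only; the caller's own environment is left untouched.
def run_vagrant(vagrantfile_dir, *args)
  system(vagrant_env(vagrantfile_dir), 'vagrant', *args)
end
```

For example, `run_vagrant('C:/Tools/devbox', 'up')` would spin up the VM from any location, assuming the Vagrantfile lives in that (hypothetical) directory.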

The main problems we encountered revolved around the file-sharing mechanism, and the approach may not work for situations that require intensive file I/O. It was easy to start with simple VirtualBox file sharing, since it just worked. It syncs instantly in both directions without any configuration, which is great for most situations. Symbolic links, however, are not well supported, because creating them requires that the user on a Windows host be granted a certain privilege. Rsync, which by default syncs in only one direction and is run on demand, was used to get around this problem. There are ways to make it poll for changes, and bi-directionality could theoretically be set up by configuring each direction separately.

However, this creates a race condition with the risk of having changes reversed or data lost. SMB shares required more effort to set up and were not fast enough for us. This required changes to our source code repository, which was not ideal. Also, due to the huge number of dependencies involved in one of our typical NPM-based front-end builds, the intense use of file I/O was causing locks on the file share, slowing down performance.

The aim was to extend a workstation running Windows by also running a Linux Kernel, to make it as easy as possible to manage and switch between working in either environment. The end result from our efforts turned out to be a very convenient solution in certain situations.